AI opens up endless possibilities. For HR teams, it can simplify global compliance. But one wrong step, and you could find yourself facing lawsuits and a damaged reputation.

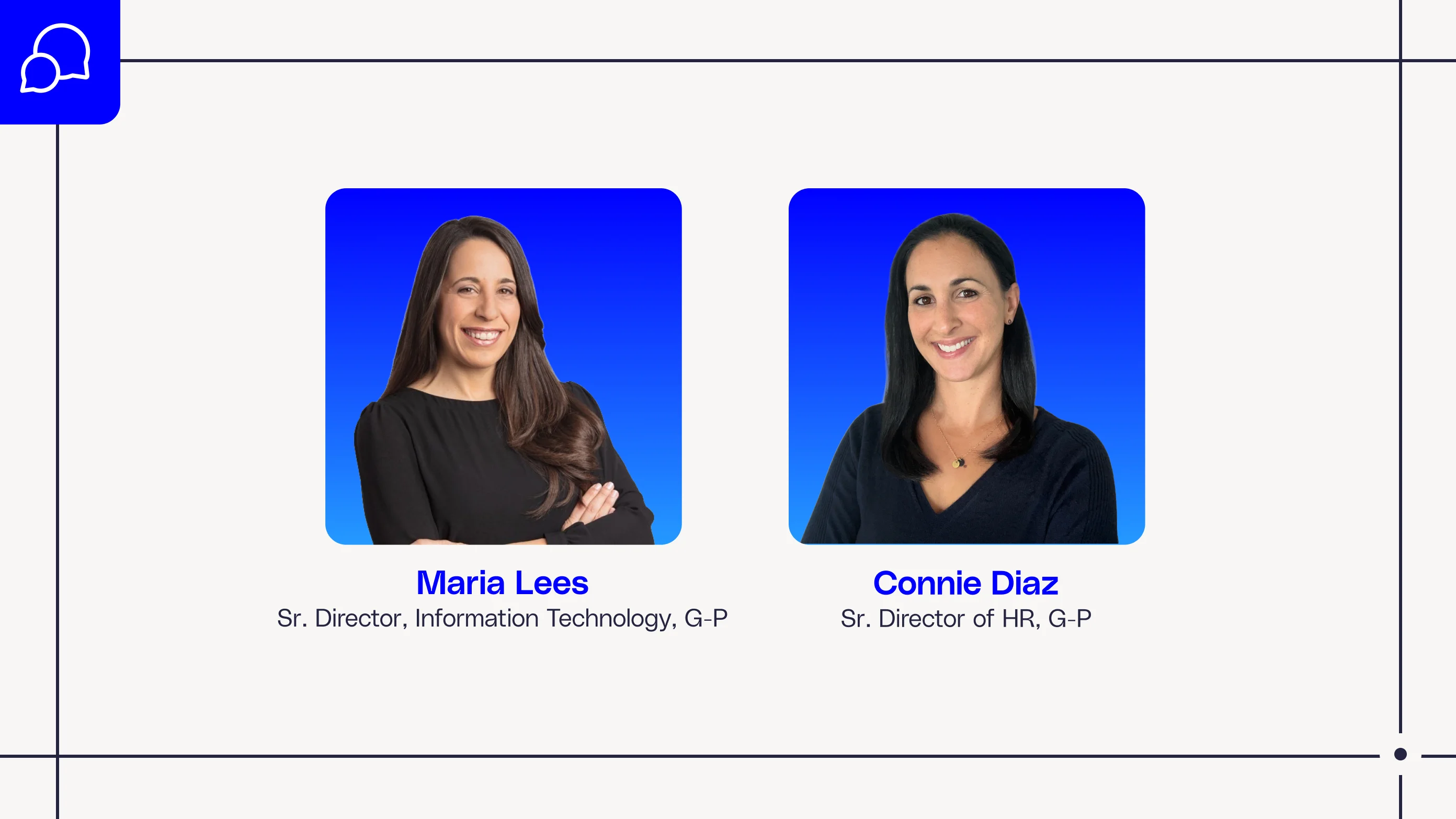

IT leaders everywhere are rolling up their sleeves and tackling AI adoption head-on. In a recent webinar, Maria Lees, Senior Director of IT at G-P, shared how HR teams can integrate AI and ease concerns about data security, bias, and privacy.

Lees joined G-P in 2023, shortly after we began building our AI-powered global HR agent, G-P Gia™. Gia is an agentic AI for HR that can reduce the cost and time of compliance by up to 95%. So Lees knows a thing or two about AI adoption, which she boils down to one critical factor: data integrity.

“You can't skip the foundational steps,” says Lees. “Part of our role as IT leaders is helping executives understand this journey. An executive might ask for an agentic AI tool that can make complex decisions on its own, and while that's a great goal, and I love it, it isn't necessarily realistic to get there without building that foundation first. To build that foundation, the first step is establishing trust in your data, and your data is only as good as the information you feed it. So if it's siloed, inaccurate, or messy, whatever you try to build on top of it will be fundamentally flawed.”

Technical trust: Bridging the 3% gap

Lees is a firm believer in “trust through transparency.” In the AI era, companies must anchor themselves in this mantra and be ready to show their sources and explain the “why” behind every AI recommendation. This is the foundation of product credibility and user trust.

“Trust and transparency are things we took to heart when developing our own AI,” Lees explains. “We knew that for anyone to trust Gia's answers, they would need to trust its foundation. And that foundation rests on a great deal of experience: a decade of G-P's global expertise. Its knowledge isn't arbitrary. It spans a million real-world scenarios, more than 100,000 legally reviewed articles, and data from more than 1,500 government sources.”

When users ask Gia a question, the answer is always accompanied by G-P Verified Sources, meaning G-P experts have validated the information. Gia was built for unmatched precision: the result is patent-pending AI combined with a proprietary RAG model that delivers results 10 times better than the AI industry standard.

Despite clear advances in AI, winning company-wide trust in these tools remains an ongoing challenge for many IT leaders. The 2025 “World at Work” report found that only 3% of executives would trust AI to make a decision. IT departments need to help leaders feel comfortable adopting AI technologies.

In Lees's words: “That 3% is really telling, and it makes complete sense right now. There are a lot of unknowns. There's a lack of knowledge and understanding, and everyone is racing to get somewhere. But it really highlights a natural trust gap. And it shows that our challenge as leaders isn't just implementing technology, but building trust in it. So I recommend thinking of AI as a new, incredibly smart team member. You have to build trust incrementally.”

The human-in-the-loop validation framework

Not all AI tools are created equal. And not every company can build its own solutions, so organizations need a strong vetting process for third-party tools. How, then, can IT teams evaluate AI tools before adopting them? “That question is really at the heart of our philosophy here at G-P,” says Lees.

Lees points to her working relationship with Connie Diaz, Senior Director of HR at G-P, to show how IT teams bring new AI technology into their organization. “When a team, especially HR, wants to introduce a new tool, my [IT] team's role isn't to be a gatekeeper at the end of the process. It's to be a strategic partner from the start. It's a true cross-functional collaboration,” she says.

IT takes a human-in-the-loop approach to validating every tool, relying on cross-functional expertise to ensure compliance and trust. The engineering team assesses alignment with technical standards, IT analyzes security risks, Legal reviews global compliance, the AI council ensures governance alignment, and HR makes the critical business and ethical case.

“Treating it as a joint effort helps build that foundation of trust,” Diaz agrees. “For an employer or a leader, an AI tool like Gia isn't just a black box; it's a tool that HR and IT experts are actively and transparently overseeing. People are much more likely to trust the results they get from it.”

HR provides assurance that the tool is used ethically and fairly, while IT provides confidence that it is secure and compliant. It's a shared responsibility that demonstrates a commitment to the integrity of both people and data.

Start with a clean data source

Rolling out a new AI tool can take anywhere from weeks to months. The timeline depends on whether your data is ready to use in an AI model right away, or whether it is incomplete and stored in incompatible formats that IT has to extract. Lees recommends starting small. “Don't try to boil the ocean. Just pick one area you can tackle and get a quick win from it. Then build your momentum from there,” she says.

The big questions generally concern data privacy.

First: Can the company's data be used to train the AI model?

Second: How can IT set up strict access controls so that only the right people see the right information?

“Because we tackle all of these critical issues together and up front, the conversation isn't about listing problems. It's about finding actual solutions,” says Lees. “When we follow this process, it's a win-win for everyone. We can confidently approve an AI tool that will help the business ... it's a process you could see as a hurdle, but we've turned it into a strong partnership to make sure we protect G-P and our data.”

IT and HR: Partners in data readiness

For teams starting their AI journey, Lees stressed the importance of preparation. Deploying an AI model on incomplete data will produce poor results that damage the very trust you're trying to build in your organization. Lees advises making the data foundation the first checkpoint, before a team even selects a tool. This is where a close partnership between HR and IT becomes so important.

First, IT can support HR by cleaning and centralizing data. That means consolidating information from different systems, such as payroll, benefits, and performance reviews, into a single source. Next, IT can help HR establish data governance and set up strong access controls to protect sensitive employee data.
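
As a minimal sketch of what consolidating into a single source might involve, the Python below merges exports from three separate systems by employee ID, refuses to silently overwrite conflicting values, and reports which records are still incomplete. The system exports, field names, and the `consolidate` and `missing_fields` helpers are hypothetical illustrations, not G-P's or Gia's implementation.

```python
# Hypothetical exports from three separate HR systems, keyed by employee ID.
# Field names and values are illustrative assumptions, not a real schema.
payroll = [
    {"employee_id": "E001", "salary": 85000, "currency": "EUR"},
    {"employee_id": "E002", "salary": 72000, "currency": "USD"},
]
benefits = [
    {"employee_id": "E001", "plan": "standard"},
]
performance = [
    {"employee_id": "E001", "rating": 4},
    {"employee_id": "E002", "rating": 3},
]

REQUIRED_FIELDS = ("salary", "currency", "plan", "rating")


def consolidate(*sources):
    """Merge per-system records into one unified record per employee ID."""
    merged = {}
    for source in sources:
        for record in source:
            emp_id = record["employee_id"]
            # Start a new unified record the first time this employee appears.
            unified = merged.setdefault(emp_id, {"employee_id": emp_id})
            for key, value in record.items():
                if key == "employee_id":
                    continue
                # Surface conflicting values instead of silently overwriting.
                if key in unified and unified[key] != value:
                    raise ValueError(f"Conflicting {key!r} for {emp_id}")
                unified[key] = value
    return merged


def missing_fields(merged):
    """Report which required fields each unified record still lacks."""
    return {
        emp_id: [f for f in REQUIRED_FIELDS if f not in record]
        for emp_id, record in merged.items()
        if any(f not in record for f in REQUIRED_FIELDS)
    }


records = consolidate(payroll, benefits, performance)
print(missing_fields(records))  # {'E002': ['plan']}
```

Raising on conflicts rather than letting the last system win is a deliberate choice in this sketch: a gap or disagreement found during consolidation is exactly the kind of issue that should be fixed at the source before any AI tool relies on the data.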

Establishing clear rules for how data is collected and used is critical for both accuracy and privacy.
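
One way to make such rules concrete, sketched here under stated assumptions, is to express them as data: validation checks applied when a record is captured, and a role-based allow-list of fields applied when it is read. The rule set, the roles `payroll_admin` and `hr_business_partner`, and the `validate` and `view_for` helpers are hypothetical examples, not a prescribed governance model.

```python
# Hypothetical governance rules: which values are valid at capture time,
# and which roles may read which fields. Roles and fields are illustrative.
CAPTURE_RULES = {
    "salary": lambda v: isinstance(v, (int, float)) and v > 0,
    "currency": lambda v: isinstance(v, str) and len(v) == 3 and v.isupper(),
    "rating": lambda v: isinstance(v, int) and 1 <= v <= 5,
}

READ_ACCESS = {
    "payroll_admin": {"employee_id", "salary", "currency"},
    "hr_business_partner": {"employee_id", "rating"},
}


def validate(record):
    """Return the names of present fields that break a capture rule."""
    return [
        name for name, rule in CAPTURE_RULES.items()
        if name in record and not rule(record[name])
    ]


def view_for(role, record):
    """Return only the fields the given role is allowed to read."""
    allowed = READ_ACCESS.get(role, set())  # unknown roles see nothing
    return {k: v for k, v in record.items() if k in allowed}


record = {"employee_id": "E001", "salary": 85000, "currency": "eur", "rating": 4}
print(validate(record))  # ['currency']
print(view_for("hr_business_partner", record))  # {'employee_id': 'E001', 'rating': 4}
```

Defaulting unknown roles to an empty set means access is denied unless it has been explicitly granted, which matches the goal of letting only the right people see the right information.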

Cut compliance costs and time by up to 95% with Gia

Don't let compliance hurdles slow your HR team down. An expertly vetted AI tool can accelerate momentum while meeting your IT team's high bar for data security, ethical transparency, and verifiable compliance. Gia is that tool.

Gia was named a 2025 Top HR Product by HR Executive. This agentic AI is designed to streamline global HR by answering your toughest compliance questions across 50 countries and all 50 U.S. states.

No more legal hurdles or costly billable hours. With Gia, global compliance is simple. Want to simplify global HR with expert guidance you can trust? Sign up for a free trial today.

To hear more from Maria Lees about how IT can partner successfully with HR, watch her full conversation with colleague Connie Diaz.